Could an animal learn to program?

Created on Nov. 11, 2011, 5:54 p.m.

This is something I asked myself innocently, not in any attempt to see if I could hire a band of monkeys to finish my programming homework. But delving into it a bit more I saw how this question really unearths some of the most interesting parts of Theory of Computation, Formal Language Theory and Evolutionary Linguistics. Though a bit of a wild ramble, understanding this question gives really quite a beautiful overview of how Computer Science approaches problem solving and shows how it isn't just physics, maths or philosophy which can be profound.

Communication With Animals

My first thoughts jumped to questions of communication with animals. This is a little bit of a mini obsession for me. Like the Voynich Manuscript it is far too tempted to think you'll find some hidden profound meaning behind the code. Also in many ways programming and giving commands seem very similar to the problems we solve in communication. So how possible would it ever be to talk to animals?

The first issue with talking to animals is in the way they vocalize sounds. Unlike humans most animals including apes appear to have little to no control over what they vocalize. Unlike a "language", or exchange of information via some protocol, they will simply exclaim and vocalize feelings. In this way the clucking of a chicken is no more than the repeated exclamation of "I'm okay". They aren't actually chatting about the weather and gossiping in the sense which we personify them. Most research shows animal vocalization is more similar to a kind of permanent tourettes like condition. Any attempt to train an animal to control their vocalization has never really worked.

But what about sign language? There have been various studies trying to get chimps to learn it. The most famous being Nim, who was raised in a human family and taught American Sign Language in an attempt to show that communication between animals and humans was possible (to refute Noam Chomsky's idea that language was innate to humans). What they found though was that Nim lacked the real ability to use language in the way that we do. He was able to learn and repeat phrases in appropriate contexts but never showed any ability to construct new sentences using the rules, syntax or grammar of the language. This is so key to human communication it is something a child has learnt by the age of five. His longest sentence - "Give orange me give eat orange me eat orange give me eat orange give me you." Not as profound as the animalistic insight I always longed for. You can see his full list of philosophical statements here.

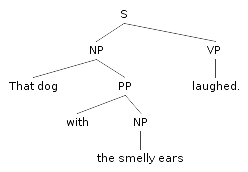

So what does this have to do with programming. Well Chomsky had a name for this property of using the rules of language to construct new sentences. It was called Generativism and could be modelled by something called a Context Free Grammar, a thing we also use to define programming languages.

Formal Languages

A Context Free Grammar is a class of Formal Language which is characterized by a set of "production rules". These rules let you generate valid strings (sentences, parts of text) of this language and also give you mechanisms for reading a string and checking if it is a valid sentence. The production rules are often written in terms of each other, which allows you to recursively build sentences with repeated, recursive structures - in a way that matches human language and appears to be the property which other animals lack when it comes to communication.

You can imagine these rules being something like the following:

Text -> Zero or more Sentence

Sentence -> NounPhrase then VerbPhrase then NounPhrase

NounPhrase -> (Adjective then NounPhrase) or Nothing

etc.

The context free grammar is so powerful that it can be used to describe the structure of the vast majority of human languages. And we also use Context Free Grammars to describe the structure of almost all modern programming languages. This is made intuitively clear by the way in which programming languages build themselves of these recursive constructs. For example within an "if" statement can go any list of other statements including another "if" statement itself. Arithmetic expressions can contain arbitrarily complex other arithmetic expressions inside of themselves if we use some brackets.

So does this mean it would be impossible for an animal to learn to program, as evidence would appear to show they don't have this ability to use Context Free Grammars? We can design an experiment to find out.

The Experiment

Designing any test for this sort of thing is exceptionally hard. The problem is that animals have a strong ability to pattern match and memorize solutions without actually doing any problem solving. Feynman explains this beautifully in his 1974 speech about Cargo Cult Science:

"But in 1937 a man named Young did a very interesting one. He had a long corridor with doors all along one side where the rats came in, and doors along the other side where the food was. He wanted to see if he could train the rats to go in at the third door down from wherever he started them off. No. The rats went immediately to the door where the food had been the time before.

The question was, how did the rats know, because the corridor was so beautifully built and so uniform, that this was the same door as before? Obviously there was something about the door that was different from the other doors. So he painted the doors very carefully, arranging the textures on the faces of the doors exactly the same. Still the rats could tell. Then he thought maybe the rats were smelling the food, so he used chemicals to change the smell after each run. Still the rats could tell. Then he realized the rats might be able to tell by seeing the lights and the arrangement in the laboratory like any commonsense person. So he covered the corridor, and still the rats could tell.

He finally found that they could tell by the way the floor sounded when they ran over it. And he could only fix that by putting his corridor in sand. So he covered one after another of all possible clues and finally was able to fool the rats so that they had to learn to go in the third door. If he relaxed any of his conditions, the rats could tell."

And this is a rat. An ape will go to extraordinary means - memorizing vast sequences of actions - to gain food. In the experiment we have to be sure that the animal is truly solving the problem and not, like Nim, using it's memory and contextual clues to come up with a somewhat appropriate answer.

One of the most basic languages that can be expressed by a Context Free Grammar is the language of palindromes - that is any string where by the second half is the same as the first half but in reverse. If an animal could not recognize palindromes it would strongly suggest that there is no chance of them producing a valid program in a conventional programming language.

If we build a machine with a number of buttons on it, and each button has a certain symbol, this can act as our alphabet. We then program this machine such that it dispenses food whenever the buttons are pressed in a way that they represent a palindrome. For example pressing banana, apple, pear, apple, banana would give food but pressing banana, apple, apple, pear would not.

To avoid the animal just pattern matching we should disallow it to use the same string twice to get food or at least have a very long time delay on when it can be reused. It would also seem sensible to ignore palindromes of length two as these are an easier problem and can be recognized by something simpler than a Context Free Grammar.

A greater food reward should be given for longer palindromes as this would encourage the animal to show he/she could solve palindromes in general.

We also need a notion of string termination. I figure the best option would be to have lights behind the buttons. These lights go out and disable the buttons for a few seconds whenever the entered string cannot possibly be a palindrome. If a string is a palindrome then the same thing should happen, but food should come out. Perhaps buttons being disabled when the light goes out is too human a notion and a sound should also be associated with button presses and disables.

Finally is the issue of explaining to the animal what we wish for them to do. It would seem unfair just to sit them there without any explanation because this is not how humans approach problems. In many ways this is the key issue of validity in the experiment and also the catch. Does the ape need some notion of a palindrome to be able to understand the problem in the first place? Then it would seem like a experiment of expression rather than problem solving. What exactly are we finding out here? If animals have the ability to "learn" programming, or if they were born with the innate skills?

I feel the easiest way to get an intuitive notion of this is just to try it. If the animal shows no interest at all perhaps we could have a human demonstrator the animal can watch - then at least he might associate pressing the buttons with getting food and try something.

The Hierarchy

I said that the language of palindromes is one of the most basic Context Free Grammars, and also that palindromes of length two are actually an "easier" problem. What did I mean by this? Why is programming so tied to Context Free Grammars and what is an "easier" grammar?

As well as Context Free Grammars there exists a whole hierarchy of grammar types. Each grammar type is able to describe languages of certain complexities. The language of length two palindromes which is actually the language of two identical symbols in a row can be recognized by a simpler type grammar than Context Free, called a Regular Grammar, which can be read using a machine called a Finite State Automata. There are in total four main types of grammar with several sub-types in-between. Each type corresponds to a certain computer or automata which can only recognize that set of languages (as well as the languages in the levels below which are simpler).

So if there is a whole hierarchy with different complexities - which correspond to a kind of problem solving ability, and we have a way to test where a certain thing exists on this hierarchy, this opens up a whole load of interesting questions. For example is it possible to program using a simpler grammar than Context Free? Where are humans compared to other animals on this hierarchy? Is the reasoning of normal everyday computers expressed by it?

I'll start with the first - is it possible to program using a simpler grammar? Yes, sort of. For example think of a programming language which is just is a series of simple commands you give a machine. "Move forward", "Turn 45 degrees", "Move backward", etc. If each unique command we give a unique symbol in our alphabet then in fact any string is a "valid" program. But this doesn't mean it actually does anything useful or solves a problem.

What we've done is just passed the problem part of communication onto the semantic processing - working out what the program you've written actually does and if what it does is a valid series of commands in context. And actually this is harder for humans than just using a Context Free Grammar because you have to deal with everything at once.

What about our position on this hierarchy. Where are we, and what about the computers? Even more importantly, could there be some conceptual being which can use more complicated grammars than us, and therefore can in some way solve harder problems, is more intelligent?

You many be relieved to hear that we are at the top. Though the top only exists as a kind of arbitrary ceiling. Things begin to get a bit more fuzzy the higher you rise. Perhaps some form of weird Quantum computer could end up being more expressive than us in some ways, though these truly are the dry areas of academic categorization and not the sign that we are heading toward a Technological singularity with computers designing and building new computers ever more intelligence than their predecessors.

Everyday computers, too, are at the top. Which means in theory they can solve all the problems a human should be able to. This is quite interesting if you consider how we think of them as simplistic automata. As our palindrome experiment would probably show, animals are far more automata like when we look at their problem solving ability. Computers, and the programs they run, are as expressive, smart, and diverse as the creatures programming them. And this is not even as you might expect, the result of electronic circuits just being a very diverse human tool. Computers have tried to capture the notion of humans solving problems since beginning of their creation. The word "computer" was originally applied to humans who performed complex astronomical and maritime calculations, and when Alan Turing was first talking about the Turing Machine and what it means to "compute" something, he was still talking about it in a human sense.

SATs for animals

If we have this hierarchy of languages could we design tests for each level like a kind of global intelligence SAT and then nominally rate animals on their intelligence?

Well we could do this, but it might end up as a very communication and human-like bias scale of intelligence. Remember that I said humans are at the top of the hierarchy (a type-0 machine), and that they can recognize all of the most complicated languages, solve all of the most complicated problems. Well why is this the case if most of human communication is done via Context Free Grammars, a simple type-2 grammar near the bottom of the scale?

It is because communication with each other is not the hardest problem we've been able to solve, and far from it. For example the image recognition abilities we show when we just look around the room. This is definitely more complicated than anything we can model using a Context Free Grammar. And also when we build computers this is a far harder problem for them to solve too (computers are pretty good at natural language processing these days).

If we rate animals based on these kind of experiments we might be performing this exact same underestimation because we are proposing the problem to them in terms of communication and language recognition rather than what they are best at. These animals might exhibit complicated problem solving behaviours in other domains.

For example it is known pigs are good at computer games. And monkeys seem fairly capable in mind control.

Evolution in the Hierarchy

How far are animals from our communication abilities? Such an broad question is hard to answer, but it does open up an interesting flaw with our current model.

I said that this hierarchy of languages contains four levels and each level corresponds to problem solving ability. Or to put it more clearly if an animal cannot recognize a language at one level then it implies there are a whole class of more complicated problems that are beyond it's reasoning capability. But this stepped categorization doesn't make much sense. It isn't like one day some human was just born who could recognize Context Free Grammars. There must have been a long and slow evolution toward it with many stages inbetween. This notion is quite peculiar to a computer scientist. When we build a computer either it can solve some class or problems or it can't. It can't be "okay" at solving this problem, or only solve it occasionally. Computers are more deterministic than that.

So how and why did humans gain this ability?

The answer to how lies in the idea I talked about before, of palindromes of length two being an "easier" thing to recognize than any length palindromes. There is going to be a similar case for almost all problems. Some trivial or small cases of problems are easier to solve than the problem in general. For example consider palindromes of length three. We can recognize this language without a Context Free Grammar; just by enumerating all the combinations. If we have an alphabet of just "a", "b" and "c". We can give a rule to generate length three palindromes without actually knowing anything about the nature of a palindrome. Namely:

Generate if "aba" or "aca" or "bab" or "bcb" or "cac" or "cbc"

This kind of "cheating" doesn't seem like it helps very much for a case such as learning how to make palindromes, but from looking at the evolution of natural language it really becomes clear as to why it works.

The main theory for the reason natural language evolved toward a Context Free Grammar is based on empathy and coorporation. As proto-humans became more reliant on cooperation and needed easy ways to express feelings about other creatures they evolved constructs which began to resemble the recursion we see in Context Free Grammars. For example they needed a way to say "You see mammoth?" As well as just what they were able to say before - "I see mammoth!". One small modulation doesn't equal a Context Free Grammar but it is clear to see how a whole stack of modifiers soon starts to create these recursive structures.

Animals and programming

People sometimes ask me "Why hasn't anyone invented a programming language which is just like plain English?" The answer isn't because it would be too hard to make. The answer is because it wouldn't be very good to use. There is too much ambiguity in natural language and if such a programming language did exist you would soon get frustrated when it continued to misunderstand you and your program started doing not what you intended. The way in which we have combined our communication abilities so tightly with our reasoning abilities is not something that ties us down - it is what gives us true expressive power in programming.

There are two main things that I think allow humans to program. One is their ability to reason - which can be as simple as understanding cause and effect or as complicated as doing mathematical proofs for complex formulae. This is something it is clear animals have to a reasonable degree. Even if in many cases they appear to take short-cuts or use memory and sense data rather than logic.

The second is the ability to communicate - to express specifically truths about the world. Separate to reasoning this is something other animals can't "pretend" to have. It seems fairly clear they lack the key properties in many areas, and this is why I think they ultimately will never program.

Other animals lack the expression of humans - any language they could program in would be so crippled that it would be little more than a small set of commands. Animals have shown they have the raw brain power for feats of memory and basic pattern matching but it is curious they have still found no way to apply this to more complicated reasoning. It is unclear if learning how to use complex language based on Context Free Grammars gave us the problem solving abilities associated with it, or if things evolved the other way round, but what is clear is how connected they are. A term like Finite State Automata is a very clinical description for animals' processing abilities, but it might be approriate if it again shows how important communication is to us, and how far it sets us aside as humans.

Footnote: Recognizing a class of language is like solving class of problems.